Abstract

Reinforcement learning has achieved great success in many applications.

However, sample efficiency remains a key challenge, with prominent methods requiring millions (or even billions) of environment steps to train.

Recently, there has been significant progress in sample-efficient image-based RL algorithms; however, consistent human-level performance on the Atari game benchmark remains an elusive goal.

We propose a sample-efficient model-based visual RL algorithm built on MuZero, which we name EfficientZero.

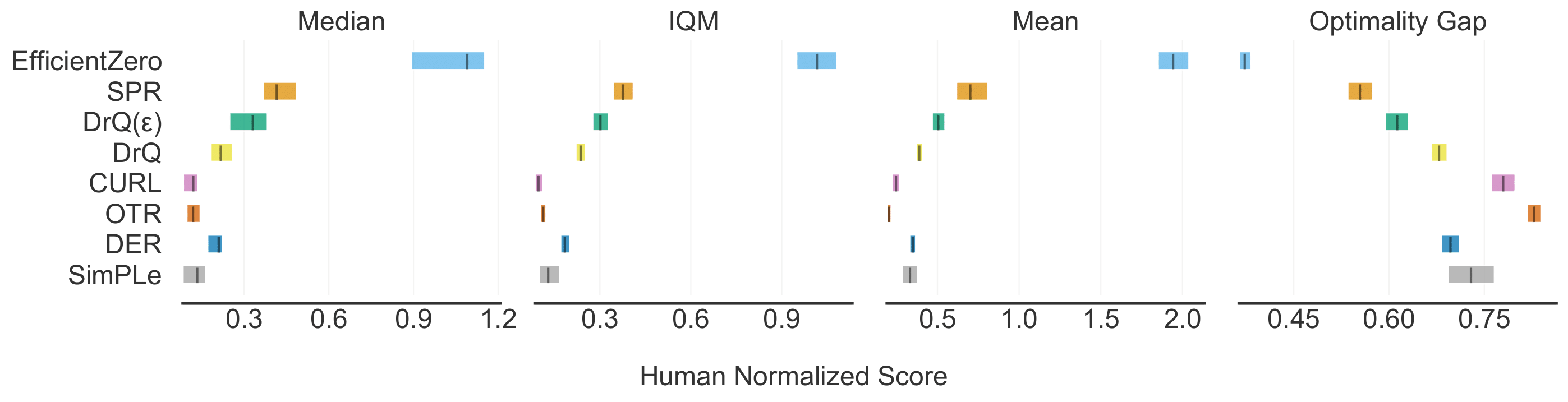

Our method achieves 194.3% mean and 109.0% median human-normalized performance on the Atari 100k benchmark with only two hours of real-time game experience, and outperforms state-based SAC on some tasks of the DMControl 100k benchmark.
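The mean and median figures above aggregate per-game human-normalized scores. A minimal sketch of how such aggregates are usually computed, assuming the standard normalization (agent - random) / (human - random); the three games and their scores below are invented for illustration.

```python
import numpy as np

def human_normalized(agent, random_play, human):
    """Per-game human-normalized score: 0.0 matches random play, 1.0 matches human level."""
    agent, random_play, human = (np.asarray(x, dtype=float) for x in (agent, random_play, human))
    return (agent - random_play) / (human - random_play)

# Hypothetical raw scores for three invented games (not results from the paper).
agent_scores = [20.0, 50.0, 5.0]
random_scores = [0.0, 10.0, 0.0]
human_scores = [10.0, 50.0, 10.0]

hns = human_normalized(agent_scores, random_scores, human_scores)  # [2.0, 1.0, 0.5]
print(f"mean:   {hns.mean():.1%}")       # mean:   116.7%
print(f"median: {np.median(hns):.1%}")   # median: 100.0%
```

A single strong game can pull the mean well above the median, which is why both aggregates are reported.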

This is the first time an algorithm has achieved super-human performance on Atari games with so little data.

EfficientZero’s performance is also close to DQN’s performance at 200 million frames, while consuming 500 times less data.
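The "two hours" and "500 times less data" figures follow from simple arithmetic, assuming the standard Atari conventions of a frame skip of 4 and an emulator running at 60 frames per second:

```python
# Atari 100k budget: 100k agent steps (actions), each repeated for several frames.
AGENT_STEPS = 100_000
FRAME_SKIP = 4         # assumed standard Atari frame skip
ATARI_FPS = 60         # Atari 2600 emulation runs at 60 frames per second

frames = AGENT_STEPS * FRAME_SKIP        # 400,000 frames
hours = frames / ATARI_FPS / 3600        # about 1.85 hours of real-time play
dqn_ratio = 200_000_000 / frames         # DQN's 200M frames vs. 400k frames

print(f"{frames:,} frames, {hours:.2f} h, {dqn_ratio:.0f}x less data than DQN")
# 400,000 frames, 1.85 h, 500x less data than DQN
```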

EfficientZero’s low sample complexity and high performance can bring RL closer to real-world applicability.

Our implementation is written to be easy to understand and is available here. We hope it will accelerate research on MCTS-based RL algorithms in the wider community.

EfficientZero

Our proposed method is built on MuZero. We make three critical changes:

- Use self-supervised learning to learn a temporally consistent environment model (more supervision)

- Learn the value prefix end-to-end (less aleatoric uncertainty)

- Use the learned model to correct off-policy value targets (mitigates the off-policy issue)
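The first change asks the hidden state predicted by the dynamics model to match the representation of the observation that actually followed. A minimal NumPy sketch of such a consistency loss, assuming a SimSiam-style negative cosine similarity between already-projected latents; the function name is ours, and the stop-gradient on the target branch, which NumPy cannot express, is only noted in a comment.

```python
import numpy as np

def negative_cosine_similarity(pred, target, eps=1e-8):
    """Temporal-consistency loss between predicted and observed latents.

    pred:   (batch, dim) projection of the hidden state unrolled by the dynamics model.
    target: (batch, dim) projection of the representation of the real next observation.
            In an autodiff framework this branch would be a constant (stop-gradient).
    Returns the batch mean of -cos(pred, target): -1.0 means perfectly aligned.
    """
    pred = pred / (np.linalg.norm(pred, axis=-1, keepdims=True) + eps)
    target = target / (np.linalg.norm(target, axis=-1, keepdims=True) + eps)
    return float(-(pred * target).sum(axis=-1).mean())

aligned = negative_cosine_similarity(np.array([[1.0, 0.0], [0.0, 2.0]]),
                                     np.array([[3.0, 0.0], [0.0, 1.0]]))
print(round(aligned, 4))  # -1.0: same directions, magnitudes ignored
```

Cosine similarity ignores vector scale, so the model is pushed to predict the direction of the next latent rather than its exact magnitude.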
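The second change predicts the value prefix, the cumulative sum of rewards along the unrolled trajectory, instead of each step's reward, so the model does not have to pinpoint the exact step on which a reward arrives. A minimal sketch of how such targets could be formed and how per-step rewards can be recovered from them by differencing; the function names and the optional `horizon` reset parameter are hypothetical illustrations.

```python
import numpy as np

def value_prefix_targets(rewards, horizon=None):
    """Cumulative reward sums used as targets for a value-prefix head.

    rewards: per-step rewards along an unrolled trajectory.
    horizon: hypothetical reset length; if given, the running sum restarts
             every `horizon` steps (like resetting a recurrent predictor's state).
    """
    targets, running = [], 0.0
    for k, r in enumerate(rewards):
        if horizon is not None and k % horizon == 0:
            running = 0.0  # start a new prefix segment
        running += r
        targets.append(running)
    return np.array(targets)

def rewards_from_prefix(prefix, horizon=None):
    """Recover per-step rewards by differencing consecutive value prefixes."""
    rewards = []
    for k, p in enumerate(prefix):
        is_reset = k == 0 or (horizon is not None and k % horizon == 0)
        rewards.append(p if is_reset else p - prefix[k - 1])
    return np.array(rewards)

prefix = value_prefix_targets([1.0, 0.0, 2.0, 0.0, 3.0])
print(prefix)                       # [1. 1. 3. 3. 6.]
print(rewards_from_prefix(prefix))  # [1. 0. 2. 0. 3.]
```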
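The third change makes value targets for stale replay data rely less on actions from an old policy. A minimal sketch of an n-step target with an age-dependent horizon, assuming the bootstrap value at the last state comes from a fresh MCTS run with the current model; the linear `adaptive_horizon` schedule, its `max_age` parameter, and the default discount are hypothetical choices, not the paper's exact settings.

```python
import numpy as np

def adaptive_horizon(age, max_age, k=5):
    """Hypothetical schedule: shrink the horizon from k to 1 as data gets older.

    age:     training steps since the trajectory was collected
    max_age: age at which the horizon bottoms out at 1 (assumed parameter)
    """
    frac = min(max(age / max_age, 0.0), 1.0)
    return int(np.clip(round(k - frac * (k - 1)), 1, k))

def corrected_value_target(rewards, bootstrap_value, horizon, gamma=0.997):
    """n-step target: sum_{i<l} gamma^i * r_{t+i} + gamma^l * v(s_{t+l}).

    rewards:         observed rewards r_t, r_{t+1}, ... from the stored trajectory
    bootstrap_value: root value at s_{t+l}, assumed to be recomputed with the
                     current model instead of reusing the stale stored value
    horizon:         l, number of observed rewards to use (l <= len(rewards))
    """
    l = horizon
    discounts = gamma ** np.arange(l)
    return float(np.dot(discounts, rewards[:l]) + gamma ** l * bootstrap_value)

l = adaptive_horizon(age=50, max_age=100)  # halfway through the schedule
print(l)                                    # 3
print(corrected_value_target([1.0] * 5, bootstrap_value=8.0, horizon=l, gamma=0.5))  # 2.75
```

Older data uses fewer observed rewards and leans more on the freshly recomputed bootstrap value, which reflects the current policy.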

Please refer to the paper for more details.

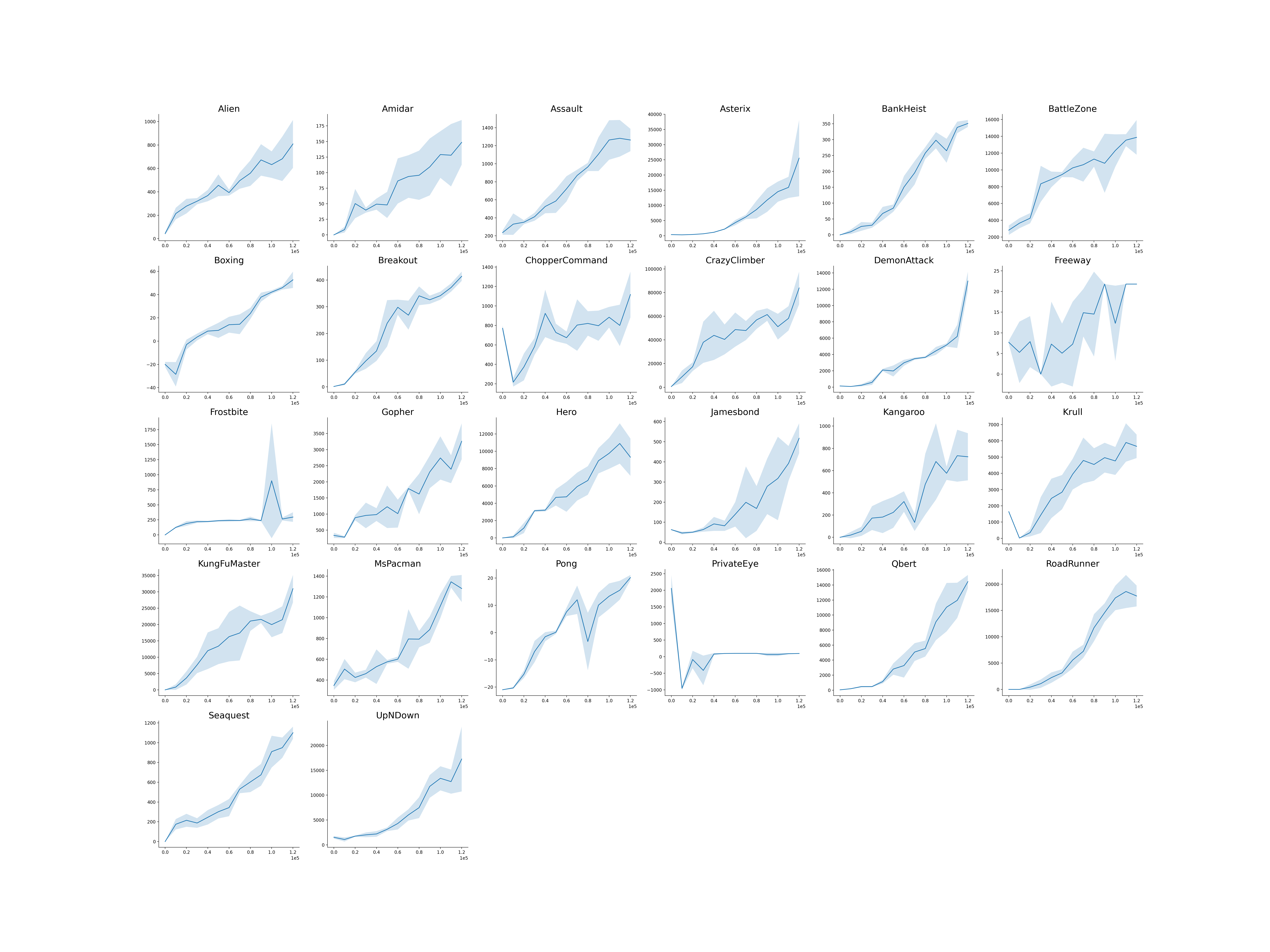

Training curve

Evaluated with 32 evaluation seeds for each of 3 independent runs (different training seeds).

IQM, optimality gap, median, and mean, computed with rliable.
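The IQM and optimality gap have short definitions. A minimal NumPy sketch of the two point estimates, written from those definitions rather than with rliable's own API; the function names are ours, scores are assumed to be human-normalized and pooled over all runs and games, and the stratified bootstrap confidence intervals that rliable reports are omitted.

```python
import numpy as np

def iqm(scores):
    """Interquartile mean: drop the lowest and highest 25% of scores, average the rest.

    Trims int(0.25 * n) scores from each end, like scipy.stats.trim_mean(x, 0.25).
    """
    x = np.sort(np.asarray(scores, dtype=float).ravel())
    cut = int(0.25 * x.size)
    return float(x[cut:x.size - cut].mean())

def optimality_gap(scores, gamma=1.0):
    """Average shortfall below the target gamma (1.0 = human level).

    Scores above gamma are clipped, so exceeding the target earns no extra credit.
    """
    x = np.asarray(scores, dtype=float).ravel()
    return float(gamma - np.minimum(x, gamma).mean())

print(iqm(np.arange(8)))                         # 3.5 (mean of 2, 3, 4, 5)
print(optimality_gap([0.5, 1.5, 2.0, 0.2]))      # about 0.325
```

Unlike the mean, the IQM is not dominated by a few outlier games, and unlike the median it still uses half of all scores.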

Some Video Results (trained with 100k environment steps)

Games shown: Pong, Breakout, Asterix, Qbert, CrazyClimber, RoadRunner.

Citing

To cite this paper, please use the following reference:

@inproceedings{ye2021mastering,
  title={Mastering Atari Games with Limited Data},
  author={Weirui Ye and Shaohuai Liu and Thanard Kurutach and Pieter Abbeel and Yang Gao},
  booktitle={NeurIPS},
  year={2021}
}